Background

Document search: indexing and retrieving documents with the open-source Elasticsearch.

Basic concepts

Mapping: defines the field types of an index, how text is analyzed (tokenized), and therefore how search behaves.

Multiple document types per index are no longer supported, and the concept of a document type has been removed:

https://www.elastic.co/guide/en/elasticsearch/reference/current/removal-of-types.html

Schedule for removal of mapping types

This is a big change for our users, so we have tried to make it as painless as possible. The change will roll out as follows:

Elasticsearch 5.6.0

- Setting `index.mapping.single_type: true` on an index will enable the single-type-per-index behaviour which will be enforced in 6.0.

- The `join` field replacement for parent-child is available on indices created in 5.6.

Elasticsearch 6.x

- Indices created in 5.x will continue to function in 6.x as they did in 5.x.

- Indices created in 6.x only allow a single-type per index. Any name can be used for the type, but there can be only one. The preferred type name is `_doc`, so that index APIs have the same path as they will have in 7.0: `PUT {index}/_doc/{id}` and `POST {index}/_doc`

- The `_type` name can no longer be combined with the `_id` to form the `_uid` field. The `_uid` field has become an alias for the `_id` field.

- New indices no longer support the old-style of parent/child and should use the `join` field instead.

- The `_default_` mapping type is deprecated.

- In 6.8, the index creation, index template, and mapping APIs support a query string parameter (`include_type_name`) which indicates whether requests and responses should include a type name. It defaults to `true`, and should be set to an explicit value to prepare to upgrade to 7.0. Not setting `include_type_name` will result in a deprecation warning. Indices which don't have an explicit type will use the dummy type name `_doc`.

Elasticsearch 7.x

- Specifying types in requests is deprecated. For instance, indexing a document no longer requires a document `type`. The new index APIs are `PUT {index}/_doc/{id}` in case of explicit ids and `POST {index}/_doc` for auto-generated ids. Note that in 7.0, `_doc` is a permanent part of the path, and represents the endpoint name rather than the document type.

- The `include_type_name` parameter in the index creation, index template, and mapping APIs will default to `false`. Setting the parameter at all will result in a deprecation warning.

- The `_default_` mapping type is removed.

Elasticsearch 8.x

- Specifying types in requests is no longer supported.

- The `include_type_name` parameter is removed.

Dynamic mapping

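- Dynamic field mapping: you can index data straight away. If the index or a field does not exist yet, Elasticsearch creates the index automatically and maps each new field to a default compatible type (for example, a whole number is inferred as long):

```shell
curl -X PUT "localhost:9200/data/_doc/1?pretty" -H 'Content-Type: application/json' -d '{ "count": 5 }'
```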

- Dynamic templates: custom rules that control how dynamically added fields are mapped.

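The default mapping is rather crude and may not meet your needs. Dynamic templates let you adapt it to your use case.

A dynamic template applies rules you define. For example, it can map date-like strings to the date type, or map decimal strings to a type suited to money amounts. Elasticsearch has no dedicated money type; scaled_float is a common choice.

The matching conditions include match_mapping_type, match, match_pattern, unmatch, path_match and path_unmatch. In other words, you can match on the detected data type, on the field name, on the full field path, and so on.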
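To make the default dynamic field mapping rules above more concrete, here is a minimal Python sketch. It returns the field type Elasticsearch would roughly choose for a JSON value, assuming default settings (date detection on, numeric detection off).

It is a simplified model, not Elasticsearch's code:

- `infer_dynamic_type` is a hypothetical helper.
- Date detection is reduced to an ISO-8601-like regex.
- Arrays and nulls are not modelled.

```python
import re

# Simplified stand-in for date detection: real ES tries its dynamic_date_formats,
# this sketch only checks for an ISO-8601-like shape.
ISO_DATE = re.compile(
    r"^\d{4}-\d{2}-\d{2}(T\d{2}:\d{2}(:\d{2}(\.\d+)?)?(Z|[+-]\d{2}:?\d{2})?)?$"
)


def infer_dynamic_type(value):
    """Roughly the mapping a default dynamic mapping would create (hypothetical helper)."""
    # bool must be checked before int: in Python, True is an instance of int.
    if isinstance(value, bool):
        return {"type": "boolean"}
    if isinstance(value, int):
        return {"type": "long"}
    if isinstance(value, float):
        return {"type": "float"}
    if isinstance(value, dict):
        # Objects become object fields whose sub-fields are mapped recursively.
        return {
            "type": "object",
            "properties": {k: infer_dynamic_type(v) for k, v in value.items()},
        }
    if isinstance(value, str):
        if ISO_DATE.match(value):
            return {"type": "date"}
        # Plain strings get a text field plus a keyword sub-field.
        return {
            "type": "text",
            "fields": {"keyword": {"type": "keyword", "ignore_above": 256}},
        }
    raise TypeError(f"not modelled in this sketch: {type(value).__name__}")


doc = {"count": 5, "price": 9.9, "name": "report", "created": "2021-03-01"}
for field, val in doc.items():
    print(field, infer_dynamic_type(val)["type"])
```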

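In practice, the type of an existing field in an ES index cannot be changed. An index is an index: if a field's type changed, everything indexed under the old type would become invalid.

So keep this in mind: any change you make only takes effect for new data, never for data already indexed. When some damn new requirement forces you to reindex a huge amount of data, you will find out how badly your hand can shake. There are other workarounds, of course. But as with rebuilding a database index, a small data set is fine; with a lot of data, you know how it goes.

So, for the best search results, define the field types you want up front (custom types will always fit your needs best). In real projects you usually combine all three approaches, because new requirements keep coming:

- explicitly define the fields you already know about;
- configure dynamic templates;
- let dynamic field mapping handle the rest.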

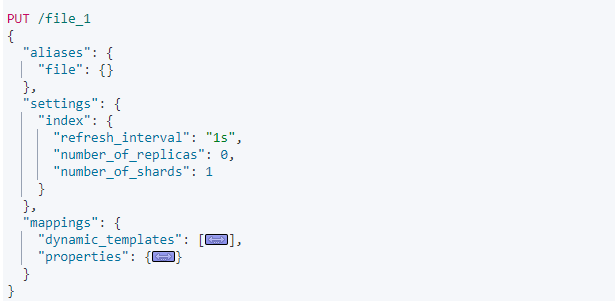

```
PUT /file_1
{
  "aliases": {
    "file": {}
  },
  "settings": {
    "index": {
      "refresh_interval": "1s",
      "number_of_replicas": 0,
      "number_of_shards": 1
    }
  },
  "mappings": {
    "dynamic_templates": [
      {
        "longs": {
          "match": "*_l",
          "mapping": {
            "type": "long"
          }
        }
      }
    ],
    "properties": {
      "tenantid": { "type": "keyword" },
      "creatorid": { "type": "keyword" },
      "creator": { "type": "text" },
      "creationtime": {
        "type": "date",
        "format": "yyyy-MM-dd'T'HH:mm:ss.SSSZ||yyyy-MM-dd HH:mm:ss.SSSZ||yyyy-MM-dd HH:mm:ss||yyyy-MM-dd||yyyy/MM/dd HH:mm:ss||yyyy/MM/dd||MM/dd/yy hh:mm:ss a||MM/dd/yyyy HH:mm:ss||strict_date_optional_time||epoch_millis"
      },
      "lastmodifierid": { "type": "keyword" },
      "lastmodificationtime": {
        "type": "date",
        "format": "yyyy-MM-dd'T'HH:mm:ss.SSSZ||yyyy-MM-dd HH:mm:ss.SSSZ||yyyy-MM-dd HH:mm:ss||yyyy-MM-dd||yyyy/MM/dd HH:mm:ss||yyyy/MM/dd||MM/dd/yy hh:mm:ss a||MM/dd/yyyy HH:mm:ss||strict_date_optional_time||epoch_millis"
      },
      "lastmodifier": { "type": "text" },
      "deleterid": { "type": "keyword" },
      "deletiontime": {
        "type": "date",
        "format": "yyyy-MM-dd'T'HH:mm:ss.SSSZ||yyyy-MM-dd HH:mm:ss.SSSZ||yyyy-MM-dd HH:mm:ss||yyyy-MM-dd||yyyy/MM/dd HH:mm:ss||yyyy/MM/dd||MM/dd/yy hh:mm:ss a||MM/dd/yyyy HH:mm:ss||strict_date_optional_time||epoch_millis"
      },
      "deleter": { "type": "text" },
      "id": { "type": "keyword" },
      "name": { "type": "text" },
      "extension": { "type": "keyword" },
      "type": { "type": "keyword" },
      "Size": { "type": "long" },
      "folderid": { "type": "keyword" },
      "path": { "type": "text" }
    }
  }
}
```

The figure above shows this request being run in Kibana. Here is roughly what each part means.

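aliases: index aliases. An alias can be used for everyday operations. More importantly, it lets you migrate to a new index when the index has to change. Here, both file and file_1 can be used to address the index.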
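The migration use of an alias usually ends with one `POST /_aliases` request. That request removes the alias from the old index and adds it to the new one; Elasticsearch applies the actions in a single request atomically.

The Python sketch below only builds that request body. The new index name `file_2` and the helper `alias_swap_actions` are hypothetical. The sketch assumes `file_2` was already created with the new mapping and filled from `file_1`, for example with the `_reindex` API.

```python
import json


def alias_swap_actions(alias, old_index, new_index):
    """Build the body for POST /_aliases that moves `alias` to `new_index`.

    Both actions are sent in one request, which Elasticsearch applies
    atomically, so clients using the alias always find an index behind it.
    """
    return {
        "actions": [
            {"remove": {"index": old_index, "alias": alias}},
            {"add": {"index": new_index, "alias": alias}},
        ]
    }


# file_2 is a hypothetical replacement index for file_1.
print("POST /_aliases")
print(json.dumps(alias_swap_actions("file", "file_1", "file_2"), indent=2))
```

Because applications only ever talk to `file`, they need no change when the underlying index is swapped.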

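refresh_interval: the refresh interval, roughly the shortest delay before newly indexed data becomes searchable.

The flow goes roughly like this:

- A newly indexed document is first written to an in-memory buffer (and the transaction log).
- A refresh writes that buffer out as a new segment in the filesystem cache, which is what makes the document searchable.
- A later flush commits the segments to disk.

The 1s here is how often the refresh happens. A refresh avoids an expensive fsync to disk, but it still has a performance cost, so set the interval to suit your needs.

This is also one of the differences between ES and a database. ES is not a database! ES is not a database! ES is not a database! It has no transactions, and there is a delay between indexing a document and being able to search it.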
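As a rough illustration of this near-real-time behaviour, here is a toy Python model. A document is invisible to search until a refresh moves the buffer into a searchable segment.

It is not Elasticsearch's implementation. `ToyShard` is a hypothetical class, and the translog, flush and merging are deliberately left out.

```python
class ToyShard:
    """Toy model of near-real-time search (not how Elasticsearch is implemented)."""

    def __init__(self):
        self.buffer = []    # indexed but not yet searchable
        self.segments = []  # searchable; in ES these live in the filesystem cache

    def index(self, doc):
        self.buffer.append(doc)

    def refresh(self):
        # What ES does every refresh_interval (1s in the mapping above).
        if self.buffer:
            self.segments.append(list(self.buffer))
            self.buffer.clear()

    def search(self, term):
        return [d for seg in self.segments for d in seg if term in d.split()]


shard = ToyShard()
shard.index("hello world")
print(shard.search("hello"))  # [] (not refreshed yet)
shard.refresh()
print(shard.search("hello"))  # ['hello world']
```

A longer refresh_interval means fewer, larger segments and less overhead, at the price of a longer window in which new documents cannot be found.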

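number_of_replicas: the number of replica shards. I set it to 0 because I run a single node on a single disk, where replicas would be pointless.

Even if you set it to something other than 0, ES will not allocate a replica on the same node as its primary. The replicas stay unassigned and the cluster health turns yellow. If you run a cluster, choose the number of replicas to fit your requirements.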
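The yellow status follows from one allocation rule: a replica never shares a node with another copy of the same shard. An index therefore has number_of_shards × (1 + number_of_replicas) shard copies, but each shard can have at most as many copies assigned as there are nodes.

The Python sketch below is a simplified health model built on that single rule. `index_health` is a hypothetical helper; real allocation also considers disk watermarks, awareness settings and more.

```python
def index_health(num_nodes, number_of_shards, number_of_replicas):
    """Simplified health colour for one index (hypothetical helper).

    Only rule modelled: copies of the same shard must sit on different nodes,
    so each shard can have at most num_nodes copies assigned.
    """
    if num_nodes < 1:
        return "red"  # nowhere to put the primaries
    copies_per_shard = 1 + number_of_replicas
    assigned = min(copies_per_shard, num_nodes)
    unassigned_replicas = (copies_per_shard - assigned) * number_of_shards
    return "green" if unassigned_replicas == 0 else "yellow"


print(index_health(1, 1, 0))  # green  (the single-node setup above)
print(index_health(1, 1, 1))  # yellow (the replica cannot be allocated)
print(index_health(2, 1, 1))  # green
```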

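number_of_shards: the number of primary shards, comparable to table partitioning in a database. A search has to query every shard, so pick the number based on your actual data.

There are also practical limits on how large a single shard should be. The number of primary shards cannot be changed once the index is created, so think it over before setting it. (This part always hurts, because changing it means migrating the index 😭.)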
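Why can't the primary shard count change? Elasticsearch routes each document with a formula whose basic documented form is `shard_num = hash(_routing) % num_primary_shards`, where `_routing` defaults to the document `_id`.

The Python sketch below uses `zlib.crc32` as a stand-in for the Murmur3 hash Elasticsearch actually uses. It also ignores the `index.number_of_routing_shards` factor in newer versions. With a roughly uniform hash, going from 3 to 4 shards moves about three quarters of the documents, so existing documents would be looked up on the wrong shard.

```python
import zlib


def shard_for(doc_id, num_primary_shards):
    # Stand-in hash: Elasticsearch actually applies Murmur3 to the _routing value.
    h = zlib.crc32(doc_id.encode("utf-8"))
    return h % num_primary_shards


ids = [f"doc-{i}" for i in range(1000)]
before = {d: shard_for(d, 3) for d in ids}
after = {d: shard_for(d, 4) for d in ids}
moved = sum(before[d] != after[d] for d in ids)
# Documents whose shard changes would no longer be found where routing expects
# them, which is why a different shard count requires building a new index.
print(f"{moved} of {len(ids)} documents would change shard")
```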

dynamic_templates: the dynamic templates. Here, any field that is not explicitly defined and whose name ends in _l is mapped as long.
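Dynamic templates are tried in order, and the first one whose conditions all match decides the mapping. If none matches, the default dynamic field mapping applies.

The Python sketch below models only `match`, `unmatch` and `match_mapping_type`. `pick_dynamic_template` is a hypothetical helper, and `fnmatchcase` is a stand-in for Elasticsearch's simple `*` wildcards (it also understands `?` and `[...]`, which Elasticsearch's simple patterns do not).

```python
from fnmatch import fnmatchcase


def pick_dynamic_template(field_name, detected_type, templates):
    """Return the mapping of the first matching dynamic template, or None.

    Simplified model: only match, unmatch and match_mapping_type are checked.
    """
    for template in templates:
        rule = next(iter(template.values()))  # each entry is {name: rule}
        if "match" in rule and not fnmatchcase(field_name, rule["match"]):
            continue
        if "unmatch" in rule and fnmatchcase(field_name, rule["unmatch"]):
            continue
        mapping_type = rule.get("match_mapping_type", "*")
        if mapping_type != "*" and mapping_type != detected_type:
            continue
        return rule["mapping"]
    return None  # fall back to the default dynamic field mapping


# The dynamic_templates section of the file_1 mapping.
templates = [{"longs": {"match": "*_l", "mapping": {"type": "long"}}}]
print(pick_dynamic_template("pagecount_l", "string", templates))  # {'type': 'long'}
print(pick_dynamic_template("pagecount", "long", templates))      # None
```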

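properties: the explicitly defined fields, where type is the field type. I use the built-in keyword, text and date types here.

- keyword: the value is not analyzed, so use it for values that must match exactly, such as ids. (I use "tokenization" loosely here, because ES does more to indexed content than just splitting it into tokens.)
- date: the date type. The long format string is there to accept the various date formats found in the data. If a field's value cannot be parsed (converted) into an ES date, indexing that document fails outright.
- text: uses ES's default analyzer, standard, which you can try out with the _analyze API: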

```
GET _analyze
{
  "analyzer": "standard",
  "text": "我爱我的祖国"
}
```

The result:

```
{
  "tokens" : [
    {
      "token" : "我",
      "start_offset" : 0,
      "end_offset" : 1,
      "type" : "<IDEOGRAPHIC>",
      "position" : 0
    },
    {
      "token" : "爱",
      "start_offset" : 1,
      "end_offset" : 2,
      "type" : "<IDEOGRAPHIC>",
      "position" : 1
    },
    {
      "token" : "我",
      "start_offset" : 2,
      "end_offset" : 3,
      "type" : "<IDEOGRAPHIC>",
      "position" : 2
    },
    {
      "token" : "的",
      "start_offset" : 3,
      "end_offset" : 4,
      "type" : "<IDEOGRAPHIC>",
      "position" : 3
    },
    {
      "token" : "祖",
      "start_offset" : 4,
      "end_offset" : 5,
      "type" : "<IDEOGRAPHIC>",
      "position" : 4
    },
    {
      "token" : "国",
      "start_offset" : 5,
      "end_offset" : 6,
      "type" : "<IDEOGRAPHIC>",
      "position" : 5
    }
  ]
}
```

Now an English sentence:

```
GET _analyze
{
  "analyzer": "standard",
  "text": "I love my country"
}
```

```
{
  "tokens" : [
    {
      "token" : "i",
      "start_offset" : 0,
      "end_offset" : 1,
      "type" : "<ALPHANUM>",
      "position" : 0
    },
    {
      "token" : "love",
      "start_offset" : 2,
      "end_offset" : 6,
      "type" : "<ALPHANUM>",
      "position" : 1
    },
    {
      "token" : "my",
      "start_offset" : 7,
      "end_offset" : 9,
      "type" : "<ALPHANUM>",
      "position" : 2
    },
    {
      "token" : "country",
      "start_offset" : 10,
      "end_offset" : 17,
      "type" : "<ALPHANUM>",
      "position" : 3
    }
  ]
}
```
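The two `_analyze` outputs above can be roughly reproduced in a few lines of Python. Each CJK ideograph becomes its own token, each run of ASCII letters and digits becomes one token, and the standard analyzer lowercases the result.

This is only a rough imitation: Elasticsearch's `standard` tokenizer implements the Unicode Text Segmentation rules (UAX #29). The hypothetical `toy_standard_analyze` covers only the basic CJK Unified Ideographs block and ASCII alphanumerics.

```python
import re

# One token per CJK ideograph (basic block U+4E00..U+9FFF) or per run of
# ASCII letters/digits; spaces and punctuation only separate tokens.
TOKEN_RE = re.compile(r"[\u4e00-\u9fff]|[A-Za-z0-9]+")


def toy_standard_analyze(text):
    tokens = []
    for position, m in enumerate(TOKEN_RE.finditer(text)):
        is_cjk = "\u4e00" <= m.group()[0] <= "\u9fff"
        tokens.append({
            "token": m.group().lower(),  # the standard analyzer lowercases tokens
            "start_offset": m.start(),
            "end_offset": m.end(),
            "type": "<IDEOGRAPHIC>" if is_cjk else "<ALPHANUM>",
            "position": position,
        })
    return tokens


for text in ("我爱我的祖国", "I love my country"):
    print([(t["token"], t["start_offset"], t["end_offset"]) for t in toy_standard_analyze(text)])
```

Splitting Chinese into single characters is why a dedicated Chinese analyzer is needed for good Chinese search.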

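That is the most basic index. The analyzer above is a very simple one: as the output shows, it splits Chinese text into single characters.

Later we will add a better Chinese analyzer (IK) and then pinyin support, which together cover the basic requirements. Custom dictionaries, smart search correction and the like can be added afterwards.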